What is Latency?

Latency is the time delay experienced in a system: the interval between the initiation of an event and the moment its effect is observed.

In computing and networking, three kinds of latency are commonly discussed:

- Network Latency: The time it takes for data to travel across a network from the source to the destination. It is typically measured in milliseconds (ms).

- Disk Latency: The time required for a computer to retrieve data from its storage device.

- System Latency: The total delay from the input action (like pressing a key) to the output result (like the character appearing on the screen).

This blog focuses on network latency.

Why does Latency Matter?

Latency matters in networking because it directly affects the speed and quality of data transmission between devices and users.

High latency can lead to noticeable delays in loading web pages, buffering in streaming services, and lag in online gaming, degrading the overall user experience.

In real-time applications such as video conferencing and VoIP calls, low latency is essential to ensure smooth, uninterrupted communication without delays or echoes.

Additionally, in enterprise environments, low network latency is crucial for the efficient operation of cloud services, data synchronization, and real-time data analytics, which are vital for business productivity and competitiveness.

Which Applications or Industries Does High Latency Impact the Most?

Latency is a concern for most industries. However, the following are impacted the most:

Online gaming: High latency causes lag, which disrupts gameplay and can result in a poor user experience, negatively affecting competitive gaming performance.

E-commerce: High latency can cause slow page loads, frustrating users and leading to abandoned shopping carts. Slow performance negatively impacts conversion rates and customer satisfaction, driving users to faster competitors.

Travel Websites: High latency delays search results and booking processes, leading to user frustration and potential abandonment. Slow response times can reduce user satisfaction and loyalty, prompting customers to choose more efficient travel platforms.

Video Conferencing and VoIP: High latency leads to delays, echoes, and interruptions in conversations, making it difficult to communicate effectively in real-time.

Financial Trading: In high-frequency trading, even milliseconds of delay can impact financial outcomes, causing missed opportunities and significant financial losses.

What Causes Network Latency?

Network latency is caused by several factors including:

Distance: The physical distance that data must travel between source and destination affects latency. Longer distances mean longer travel times.

Congestion: High traffic on the network can cause congestion, leading to delays as data packets wait to be transmitted.

Propagation Delay: The time it takes for a signal to travel through a medium (fiber optics, copper cables, etc.) contributes to latency. Different media have different speeds.

Routing and Switching: Each router and switch that data passes through adds processing time, increasing overall latency.

Packet Loss and Retransmission: When packets are lost in transit, they must be retransmitted, causing delays.

Bandwidth: Limited bandwidth can slow down data transmission, increasing latency, especially during peak usage times.

Protocol Overhead: The processing required for various network protocols (like TCP/IP) adds to latency, as data packets need to be constructed, sent, received, and confirmed.

Quality of Service (QoS) Mechanisms: Some networks prioritize certain types of traffic, which can delay the transmission of lower-priority data.

Packet Inspection by Perimeter Security Solutions: Perimeter security solutions such as Firewalls, Next Gen WAFs, WAAP and others perform deep packet inspection to detect and block malicious traffic. These comprehensive security checks involve multiple layers of analysis and filtering, which can result in additional latency as each request is processed.
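To put numbers on the distance and propagation delay factors above, here is a minimal Python sketch. It assumes light in optical fiber travels at roughly two-thirds of its speed in a vacuum, which is a commonly quoted approximation. The function name and the 10,000 km distance are illustrative, and real routes add routing, queuing, and processing delays on top of this lower bound.

```python
# Speed of light in a vacuum, in kilometers per second.
SPEED_OF_LIGHT_KM_PER_S = 299_792.458

# Approximate signal speed as a fraction of c (illustrative values).
VELOCITY_FACTOR = {
    "vacuum": 1.0,
    "fiber": 0.67,  # light in glass travels at roughly two-thirds of c
}


def propagation_delay_ms(distance_km: float, medium: str = "fiber") -> float:
    """One-way propagation delay in milliseconds over the given medium."""
    speed_km_per_s = SPEED_OF_LIGHT_KM_PER_S * VELOCITY_FACTOR[medium]
    return distance_km / speed_km_per_s * 1000.0


one_way = propagation_delay_ms(10_000)
print(f"10,000 km over fiber: ~{one_way:.1f} ms one way, ~{2 * one_way:.1f} ms round trip")
```

Even with no congestion and no processing overhead, a 10,000 km path costs on the order of 50 ms each way, which is why distance alone can dominate latency for far-away users.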

Latency vs Bandwidth vs Throughput – What is the Difference?

People often confuse latency, bandwidth, and throughput because they all relate to network performance but describe different aspects of it.

Latency refers to the time delay in data transmission, bandwidth is the maximum data transfer capacity of a network, and throughput is the actual rate at which data is transferred.

These terms are interconnected and can affect each other, leading to misunderstandings.

For example, a high-bandwidth connection may still feel slow if latency is high, and marketing often focuses on bandwidth, overshadowing the importance of latency and throughput.

This overlap and the technical nature of these concepts contribute to the confusion.

| Aspect | Latency | Bandwidth | Throughput |
| --- | --- | --- | --- |
| Definition | Time delay between request and response | Maximum data transfer rate | Actual data transfer rate |
| Measurement | Milliseconds (ms) | Bits per second (bps), Mbps, Gbps | Bits per second (bps) |
| Impact | Affects response time and speed | Indicates capacity of the network | Reflects real-world network performance |
| Perception | Experienced as lag or delay | Marketed as internet speed | Observed as download/upload speed |
| Example | Delay in the video call | ISP’s advertised internet speed | Speed of downloading a file |

Understanding these differences helps to diagnose network issues more accurately and makes it easier to communicate specific problems to service providers or technical support.
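The interplay between the three terms can be sketched with a deliberately simplified model: the time to fetch a payload is one round trip plus the time needed to push the bits through the link. The function names and the example numbers are assumptions for illustration. The model ignores TCP slow start, packet loss, and protocol overhead.

```python
def transfer_time_ms(size_bytes: int, bandwidth_mbps: float, rtt_ms: float) -> float:
    """Rough fetch time: one round trip plus the time to transmit the payload."""
    transmit_ms = (size_bytes * 8) / (bandwidth_mbps * 1_000_000) * 1000.0
    return rtt_ms + transmit_ms


def effective_throughput_mbps(size_bytes: int, bandwidth_mbps: float, rtt_ms: float) -> float:
    """Throughput actually achieved for this transfer, in megabits per second."""
    total_s = transfer_time_ms(size_bytes, bandwidth_mbps, rtt_ms) / 1000.0
    return (size_bytes * 8) / total_s / 1_000_000


payload = 100_000  # a 100 KB response

# A 1 Gbps link with 200 ms of round-trip latency...
fast_pipe = transfer_time_ms(payload, bandwidth_mbps=1000, rtt_ms=200)
# ...versus a 100 Mbps link with 20 ms of round-trip latency.
short_path = transfer_time_ms(payload, bandwidth_mbps=100, rtt_ms=20)

print(f"1 Gbps, 200 ms RTT:  {fast_pipe:.1f} ms")
print(f"100 Mbps, 20 ms RTT: {short_path:.1f} ms")
```

Under this model the link with ten times less bandwidth still delivers the small payload sooner, because latency rather than capacity dominates the transfer.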

How is Latency Measured?

Latency is typically measured in milliseconds (ms) and can be assessed using various methods depending on the context. One common way to measure latency is through a ping test, which sends a small packet of data from one device to another and measures the round-trip time for the data to travel between them.

For example, let’s say you want to measure the latency between your computer and a remote server. You can use the ping command in the command prompt or terminal:

ping www.example.com

After executing this command, your computer sends a small packet of data to the specified server (www.example.com). The server then receives the packet, processes it, and sends it back to your computer.

The ping command measures the round-trip time, which includes the time it takes for the packet to travel from your computer to the server (the outbound latency) and the time it takes for the response to travel back from the server to your computer (the inbound latency).

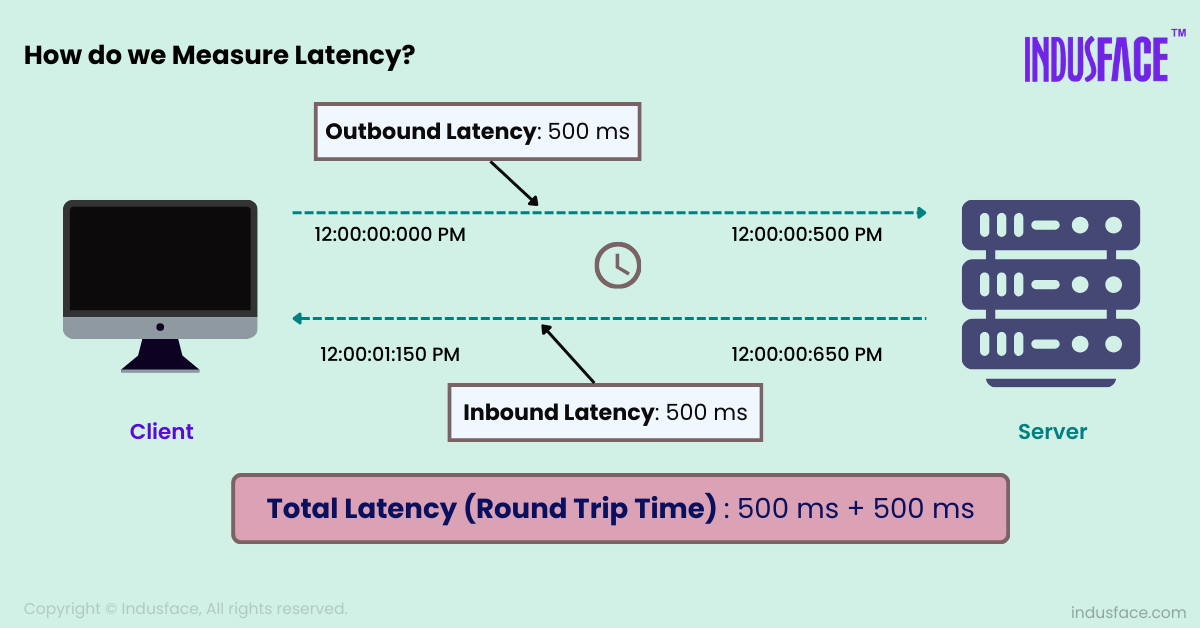
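If you want to take a similar measurement from code, one option is to time a TCP connection instead of an ICMP ping, since ICMP usually requires elevated privileges. The sketch below is a stand-in for ping, not an equivalent: it times the TCP handshake, and if you pass a hostname it also includes DNS resolution time. The function name and default port are assumptions.

```python
import socket
import time


def tcp_connect_time_ms(host: str, port: int = 443, timeout: float = 3.0) -> float:
    """Return the time in milliseconds taken to open a TCP connection to host:port."""
    start = time.perf_counter()
    # create_connection resolves the host and completes the TCP handshake.
    with socket.create_connection((host, port), timeout=timeout):
        elapsed = time.perf_counter() - start
    return elapsed * 1000.0
```

Calling `tcp_connect_time_ms("www.example.com")` several times and looking at the median gives a steadier figure than a single sample.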

Here’s an example of latency calculation:

- Timestamp when the request is sent: 12:00:00.000 PM

- Timestamp when the request is received at the server: 12:00:00.500 PM (500 milliseconds after the request was sent)

- Timestamp when the response is sent from the server: 12:00:00.650 PM (150 ms after the request was received at the server)

- Timestamp when the response is received at your computer: 12:00:01.150 PM (500 milliseconds after the response was sent from the server)

Now, let’s calculate the round-trip time (latency) in milliseconds:

Outbound Latency (Time taken for the request to reach the server):

12:00:00.500 PM (Received at the server) - 12:00:00.000 PM (Sent from your computer) = 500 milliseconds

Inbound Latency (Time taken for the response to return to your computer):

12:00:01.150 PM (Received at your computer) - 12:00:00.650 PM (Sent from the server) = 500 milliseconds

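Total Network Latency (outbound plus inbound):

Outbound Latency + Inbound Latency = 500 milliseconds + 500 milliseconds = 1000 milliseconds (or 1 second)

Note that the full round trip observed at your computer, from 12:00:00.000 PM to 12:00:01.150 PM, is 1150 milliseconds, because it also includes the 150 milliseconds the server spent processing the request.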

When you use a perimeter security solution such as a WAAP, keeping track of both inbound and outbound latency is critical, since high latency could negatively impact your business. The latency introduced by the solution can be measured as the time it adds to the complete round trip.

Within the example above, the difference between the time the response was sent from the server (12:00:00.650 PM) and the time the request was received at the server (12:00:00.500 PM) is 150 milliseconds. This interval reflects application performance and is called server latency.

When debugging latency, it is important to determine whether the delay comes from network latency or server latency.
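The worked example above can be reproduced with a short Python sketch. The variable names and the calendar date are arbitrary; only the times of day come from the example. Keep in mind that comparing timestamps taken on two different machines only gives meaningful one-way figures if their clocks are synchronized.

```python
from datetime import datetime, timedelta


def ms_between(start: datetime, end: datetime) -> float:
    """Elapsed time from start to end, in milliseconds."""
    return (end - start) / timedelta(milliseconds=1)


# The four timestamps from the example (the date itself is arbitrary).
request_sent = datetime(2024, 1, 1, 12, 0, 0, 0)
request_received = datetime(2024, 1, 1, 12, 0, 0, 500_000)
response_sent = datetime(2024, 1, 1, 12, 0, 0, 650_000)
response_received = datetime(2024, 1, 1, 12, 0, 1, 150_000)

outbound_ms = ms_between(request_sent, request_received)
server_ms = ms_between(request_received, response_sent)
inbound_ms = ms_between(response_sent, response_received)

network_ms = outbound_ms + inbound_ms
total_elapsed_ms = ms_between(request_sent, response_received)

print(f"Outbound latency: {outbound_ms:.0f} ms")
print(f"Server latency:   {server_ms:.0f} ms")
print(f"Inbound latency:  {inbound_ms:.0f} ms")
print(f"Network latency:  {network_ms:.0f} ms")
print(f"Total elapsed:    {total_elapsed_ms:.0f} ms")
```

Splitting the total this way shows at a glance whether the network or the server accounts for most of the delay.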

What is a Good Latency?

For real-time applications like online gaming, video conferencing, and financial trading, low latency is critical to ensure smooth, responsive interactions. In these cases, server latencies in the tens of milliseconds (below 100 ms) are often targeted.

For general web applications, such as e-commerce sites, social media platforms, and content delivery networks (CDNs), server latencies of a few hundred milliseconds (under 500ms) are typically considered acceptable. However, lower latency is always preferable to provide a more seamless user experience.

How can Latency be Reduced?

Broadly speaking, three components impact latency: 1) application architecture, 2) network distance, and 3) edge security solutions.

Application architecture components, including application code, database design, API design, server configuration, and caching strategies, have a major impact on server latency. Keep these components optimized by regularly monitoring server latency and benchmarking it against competitors and other internet-facing applications.

Network distance also plays a major part, as latency increases with the distance between the user and the origin server. For example, if your origin servers are in the US and your user is in Asia, the response will take longer to reach the user.

The ideal way to reduce network latency is to place your origin server as close as possible to your users.

That said, if you run a global business with users across the world, it is not possible to host your application close to every user. Here, solutions like a CDN help applications perform faster by serving cached content from the POP closest to the user.

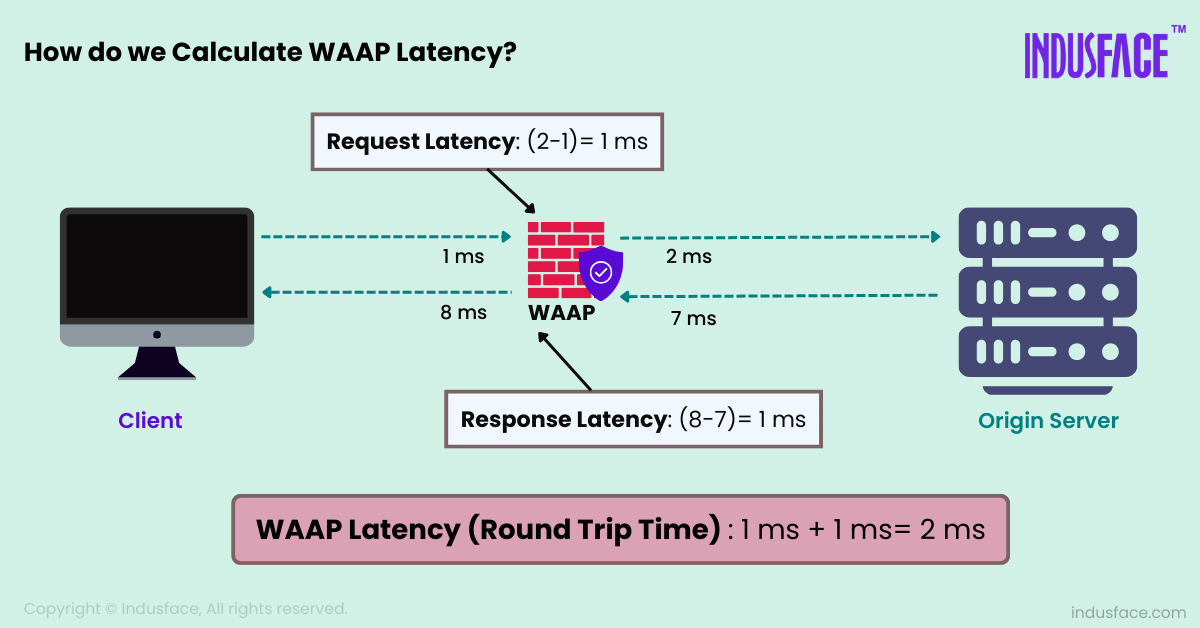

For edge security solutions such as a WAF or WAAP, ensure that you accurately measure the following:

1. Time when the request is received by the WAF/WAAP.

2. Time when the request is sent to the origin from the WAF/WAAP.

3. Time when the response is received by the WAF/WAAP.

4. Time when the response is sent by the WAF/WAAP to the user.

Then calculate the WAAP latency as (2 - 1) + (4 - 3), where the numbers refer to the timestamps above: (2 - 1) gives the request latency and (4 - 3) gives the response latency.
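As a minimal sketch of this calculation, the function below takes the four timestamps in the order listed and returns the request, response, and total latency added by the security layer. The function name and the sample values are hypothetical. All four timestamps are assumed to be in milliseconds and taken from the same clock.

```python
def waap_added_latency_ms(t1: float, t2: float, t3: float, t4: float) -> dict:
    """Latency added by a WAF/WAAP, given four timestamps in milliseconds.

    t1: request received by the WAF/WAAP
    t2: request sent to the origin
    t3: response received by the WAF/WAAP
    t4: response sent to the user
    """
    request_latency = t2 - t1   # time spent inspecting the request
    response_latency = t4 - t3  # time spent processing the response
    return {
        "request_ms": request_latency,
        "response_ms": response_latency,
        "total_ms": request_latency + response_latency,
    }


# Made-up sample values: the origin takes 120 ms between t2 and t3,
# which is deliberately excluded from the WAAP figure.
sample = waap_added_latency_ms(t1=0.0, t2=3.0, t3=123.0, t4=127.0)
print(sample)
```

The time between timestamps 2 and 3 belongs to the origin and the network path to it, so it does not count toward the latency of the security layer.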

Once you understand this figure, it is easy to debug whether the latency is caused by the perimeter security solution.

If that is the case, understanding which security policies are causing the latency and taking corrective measures is the next step.

How does AppTrana WAAP Reduce Latency?

AppTrana WAAP ensures that added latency is kept to a minimum. It includes a fully managed CDN with caching policies optimized by AppTrana’s performance engineers, ensuring cached content is delivered from the nearest POP (Point of Presence).

AppTrana has 400+ CDN POPs all over the world, ensuring the best possible performance for the application. The AppTrana team also helps you cache dynamic content when possible.

Non-cached traffic is inspected by AppTrana’s WAAP nodes to block malicious content, ensuring only clean traffic reaches the server. To reduce latency, traffic is inspected by WAAP nodes closest to the origin. AppTrana has WAAP nodes in 5 regions globally, allowing applications with multiple origins to be served by nearby WAAP nodes.

With all the above measures, AppTrana ensures that the latency added by its WAAP is under 10 ms. In many cases, with the CDN in place, AppTrana even improves site performance.