What is Load Balancing?

Load balancing is the process of distributing network traffic or computing workloads across multiple servers to prevent any single server from being overwhelmed. This improves speed, reduces latency and ensures applications stay available, even during peak traffic or server failures.

It is a core part of most internet applications. By spreading out user requests, load balancing avoids bottlenecks, keeps systems responsive, and adds a layer of fault tolerance.

In a web application context, load balancers act as intermediaries between clients and servers, handling the task of routing requests to the appropriate backend servers based on factors such as server health, traffic volume, or even geographic location.

How Load Balancing Works

Load balancing is carried out by a component known as a load balancer, which can be hardware-based, software-based, or cloud-native. Its job is to distribute incoming client requests or data evenly across multiple backend servers, so no single server is overloaded.

When a user makes a request, such as visiting a website or calling an API, the load balancer acts as the first point of contact. It decides where to route the request based on current server load, availability, and the configured load balancing algorithm. These algorithms may follow static rules (like round robin) or dynamic ones (like least connections or fastest response time).

Depending on its configuration, a load balancer can operate at various layers of the OSI model. At Layer 4 (Transport Layer), it makes decisions based on IP address and TCP/UDP ports. At Layer 7 (Application Layer), it can inspect HTTP headers, URLs, or cookies to make more context-aware decisions.
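To make the difference between the two layers concrete, here is a minimal Python sketch. The `Request` class, the pool names, and the routing rules are all hypothetical and exist only for illustration; a real load balancer works on live connections rather than on a simple object like this.

```python
from dataclasses import dataclass, field


@dataclass
class Request:
    """Simplified view of an incoming request (illustrative only)."""
    client_ip: str
    dest_port: int
    path: str = "/"
    headers: dict = field(default_factory=dict)


# Hypothetical mapping from destination port to backend pool.
POOLS_BY_PORT = {443: "web-pool", 5432: "db-pool"}


def route_layer4(request):
    # Layer 4: only addresses and ports are visible, so the decision
    # is made on the destination port alone.
    return POOLS_BY_PORT[request.dest_port]


def route_layer7(request):
    # Layer 7: the HTTP request is visible, so the URL path and
    # cookies can influence the decision.
    if request.path.startswith("/api/"):
        return "api-pool"
    if "beta=1" in request.headers.get("Cookie", ""):
        return "beta-pool"
    return "web-pool"
```

The Layer 4 function never looks at the path or headers, which is the trade-off: it has less information to work with, but also less to parse for each connection.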

By efficiently routing traffic, load balancers reduce latency, improve fault tolerance, and ensure users get consistent and uninterrupted access, even when individual servers are down or overwhelmed.
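The failover behavior mentioned above can be sketched as a pool that skips servers marked unhealthy. This is a simplified illustration: the class and method names are made up, and in practice the `mark_down` and `mark_up` calls would be driven by periodic health checks rather than called by hand.

```python
class HealthAwarePool:
    """Round-robin rotation that skips servers currently marked down."""

    def __init__(self, servers):
        self._servers = list(servers)
        self._healthy = set(self._servers)
        self._next = 0

    def mark_down(self, server):
        # Called when a health check fails (assumed to run elsewhere).
        self._healthy.discard(server)

    def mark_up(self, server):
        # Called when a previously failing server passes its check again.
        if server in self._servers:
            self._healthy.add(server)

    def next_server(self):
        # Try each server at most once per call, in rotation order.
        for _ in range(len(self._servers)):
            candidate = self._servers[self._next]
            self._next = (self._next + 1) % len(self._servers)
            if candidate in self._healthy:
                return candidate
        raise RuntimeError("no healthy servers available")


pool = HealthAwarePool(["app-1", "app-2", "app-3"])
pool.mark_down("app-2")
picks = [pool.next_server() for _ in range(4)]
```

With `app-2` marked down, requests alternate between the two remaining servers until it is marked up again.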

5 Types of Load Balancing

The most common types are:

1. Static Load Balancing

Uses predefined rules (e.g., round robin) that do not adapt to current load conditions. Simple, but not ideal for unpredictable traffic. It works best in environments where server performance and traffic patterns are consistent and predictable.

2. Dynamic Load Balancing

Adjusts routing decisions in real-time based on server health, current load, or response time. Ensures more reliable performance. This approach uses adaptive algorithms like least connections or response-time-based routing to make intelligent decisions on the fly.

3. Global Load Balancing

Distributes traffic across multiple geographic regions or data centers, optimizing latency and availability for global users. It also provides fault tolerance by rerouting traffic during regional outages or performance degradation.

4. Client-Side Load Balancing

Used in microservices, where the client is aware of multiple service instances and picks one based on internal logic.

5. Cloud Load Balancing

Offered by providers like AWS, Azure, or GCP. These services dynamically scale and distribute traffic without manual intervention.

Whether static or dynamic, local or global, load balancing strategies must align with your application architecture and user distribution.
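As an illustration of the dynamic approach, the following Python sketch routes to the server with the lowest recent response time. It assumes an exponentially weighted moving average (EWMA) as the smoothing method, which is one possible choice and not something the strategies above prescribe; the class name and the `alpha` parameter are hypothetical.

```python
class ResponseTimeBalancer:
    """Prefer the server with the lowest smoothed response time."""

    def __init__(self, servers, alpha=0.3):
        self._alpha = alpha  # weight given to the newest measurement
        # Servers start at 0.0, so untested servers are tried first.
        self._latency = {server: 0.0 for server in servers}

    def record(self, server, seconds):
        # Blend the new measurement into the running average.
        previous = self._latency[server]
        self._latency[server] = (
            self._alpha * seconds + (1 - self._alpha) * previous
        )

    def pick(self):
        # Lowest smoothed latency wins; ties go to the first server listed.
        return min(self._latency, key=self._latency.get)


balancer = ResponseTimeBalancer(["app-1", "app-2"])
balancer.record("app-1", 0.5)
balancer.record("app-2", 0.1)
fastest = balancer.pick()
```

A static strategy would ignore these measurements entirely, which is why it suits predictable traffic better than fluctuating traffic.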

Common Load Balancing Algorithms

Load balancing effectiveness depends on how requests are assigned to backend resources. These algorithms define that logic:

Round Robin

Cycles through a list of servers in a fixed sequence, sending each new request to the next one in line. It’s ideal for evenly sized servers but can struggle when workloads vary across instances.
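A minimal Python sketch of round robin follows. The server names are placeholders, and the class is illustrative and not taken from any particular load balancer.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hand out servers in a fixed, repeating order."""

    def __init__(self, servers):
        if not servers:
            raise ValueError("at least one server is required")
        self._rotation = cycle(list(servers))

    def next_server(self):
        return next(self._rotation)


balancer = RoundRobinBalancer(["app-1", "app-2", "app-3"])
picks = [balancer.next_server() for _ in range(5)]
# picks == ["app-1", "app-2", "app-3", "app-1", "app-2"]
```

Note that the rotation takes no account of how busy each server is, which is why uneven workloads can cause trouble.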

Least Connections

Routes traffic to the server with the fewest active client connections. This works well for applications with uneven or long-lived sessions, helping avoid overloading busy nodes.
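The idea can be sketched in Python as a counter per server. The `acquire` and `release` names are hypothetical; a real load balancer tracks connection open and close events itself.

```python
class LeastConnectionsBalancer:
    """Route each new connection to the server with the fewest active ones."""

    def __init__(self, servers):
        self._active = {server: 0 for server in servers}

    def acquire(self):
        # Pick the server with the lowest count; ties go to the first listed.
        server = min(self._active, key=self._active.get)
        self._active[server] += 1
        return server

    def release(self, server):
        # Called when a connection closes.
        if self._active[server] > 0:
            self._active[server] -= 1


balancer = LeastConnectionsBalancer(["app-1", "app-2"])
first = balancer.acquire()   # app-1
second = balancer.acquire()  # app-2
balancer.release(first)      # app-1 is idle again
third = balancer.acquire()   # app-1, since it now has fewer connections
```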

Weighted Round Robin

Enhances round robin by assigning weights to servers based on their processing capacity. Servers with higher weights receive more traffic, making it suitable for mixed-capacity environments.
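One way to implement this is the "smooth" variant sketched below, which interleaves servers instead of sending a burst to the heaviest one. This is only one of several possible weighted schemes; the weights and server names are illustrative.

```python
class SmoothWeightedRoundRobin:
    """Weighted rotation that spreads picks for heavy servers over time."""

    def __init__(self, weights):
        if not weights or any(w <= 0 for w in weights.values()):
            raise ValueError("weights must be a non-empty mapping of positive numbers")
        self._weights = dict(weights)
        self._total = sum(self._weights.values())
        self._current = {server: 0 for server in self._weights}

    def next_server(self):
        # Every server earns credit equal to its weight on each round.
        for server, weight in self._weights.items():
            self._current[server] += weight
        # The server with the most credit is chosen and pays back the total.
        chosen = max(self._current, key=self._current.get)
        self._current[chosen] -= self._total
        return chosen


balancer = SmoothWeightedRoundRobin({"big": 3, "small": 1})
picks = [balancer.next_server() for _ in range(8)]
```

Over any full cycle of four picks, `big` receives three requests and `small` receives one, matching the 3:1 weights.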

IP Hashing

Computes a hash of the client’s IP address and uses it to determine which server handles the request. Repeated requests from the same IP address land on the same server, which provides session persistence as long as the server pool stays the same and the client’s address does not change.
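A minimal Python sketch of IP hashing is shown below. It uses SHA-256 from the standard library purely as a stable hash function (Python's built-in `hash()` is randomized per process for strings, so it would not give repeatable results); the function name and addresses are illustrative.

```python
import hashlib


def pick_server(client_ip, servers):
    """Map a client IP to a server deterministically."""
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    # Interpret the first 8 bytes as an integer and wrap it onto the pool.
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


servers = ["app-1", "app-2", "app-3"]
first = pick_server("203.0.113.7", servers)
again = pick_server("203.0.113.7", servers)  # same server as `first`
```

With this simple modulo scheme, adding or removing a server changes the mapping for most clients. Consistent hashing is a common way to limit that reshuffling.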

Random Selection

Selects a backend server at random for each new request. It’s simple and fast, but less efficient under heavy or skewed traffic unless combined with other optimization logic.
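The sketch below shows plain random selection next to one example of the extra logic mentioned above: sampling two servers at random and keeping the less loaded one (often called "power of two choices"). That technique is an assumption added here for illustration, and the connection counts are made-up inputs.

```python
import random


def pick_random(servers, rng=random):
    """Plain random selection: every server is equally likely."""
    return rng.choice(servers)


def pick_two_choices(active_connections, rng=random):
    """Sample two distinct servers at random and keep the less loaded one."""
    names = list(active_connections)
    candidates = rng.sample(names, k=min(2, len(names)))
    return min(candidates, key=active_connections.get)


servers = ["app-1", "app-2", "app-3"]
any_server = pick_random(servers)

# With only two servers, both are sampled, so the idle one always wins.
lighter = pick_two_choices({"app-1": 10, "app-2": 0})
```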

Benefits of Load Balancing

1. Improved Scalability

New servers can be added seamlessly as traffic grows, ensuring consistent performance without changing the client-side logic or application interface.

2. High Availability

If one server fails, requests are automatically redirected to healthy servers. This minimizes service disruption and supports fault tolerance across systems.

3. Optimized Resource Use

By evenly distributing requests, load balancers prevent resource hogging, allowing better CPU and memory usage across all servers in the pool.

4. Faster Response Times

Traffic can be sent to the least busy or geographically closest server, depending on the algorithm in use, which reduces latency and delivers faster load times for users worldwide.

5. Enhanced Security

Load balancers act as gatekeepers, hiding backend servers and integrating with tools like SSL/TLS, Web Application Firewalls and DDoS protection mechanisms.

Load Balancing in Web Application Security

Load balancing isn’t just for speed; it’s a core component of secure application design:

Hides Internal Infrastructure

Clients interact only with the load balancer, never with backend IPs or server details. Because attackers cannot reach the backend infrastructure directly, they are left with fewer entry points, which reduces the risk of targeted attacks.


DDoS Mitigation

One of the most common and disruptive attacks in the modern threat landscape is the DDoS attack. During a DDoS attack, attackers flood a server with massive amounts of traffic, overwhelming the system and making it unavailable to legitimate users.

Here, load balancing becomes an important line of defense. When integrated with auto-scaling, the system can absorb surges in traffic by scaling horizontally (adding new servers) or vertically (increasing the capacity of existing ones), while the load balancer spreads requests across the enlarged pool. This helps keep individual servers from being overwhelmed by malicious traffic.

Centralized TLS Termination

Load balancers play a key role in centralized TLS termination by handling SSL/TLS decryption at the edge, before traffic reaches backend servers. This offloads the computational burden from individual servers, improves performance, and simplifies certificate management. By centralizing TLS at the load balancer, organizations can enforce consistent security policies and reduce complexity across the infrastructure.

Challenges in Load Balancing

Despite its strengths, load balancing isn’t without complications:

Session Persistence

If a user’s session is not pinned to a specific server (via sticky sessions or tokens), they may lose data or be forced to re-authenticate when switched between servers.
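Sticky sessions can be sketched as a lookup table from session ID to server. The class below is a simplified, in-memory illustration with hypothetical names; real deployments typically carry the mapping in a cookie or a shared store so it survives a load balancer restart.

```python
from itertools import cycle


class StickySessionRouter:
    """Pin each session to the server that handled its first request."""

    def __init__(self, servers):
        self._rotation = cycle(list(servers))
        self._assignments = {}

    def route(self, session_id):
        server = self._assignments.get(session_id)
        if server is None:
            # First request for this session: assign the next server in rotation.
            server = next(self._rotation)
            self._assignments[session_id] = server
        return server


router = StickySessionRouter(["app-1", "app-2"])
a = router.route("session-A")  # app-1
b = router.route("session-B")  # app-2
a_again = router.route("session-A")  # app-1 again, not the next in rotation
```

The alternative named above, tokens, avoids pinning altogether by keeping session state in the token itself, so any server can handle any request.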

Configuration Complexity

Setting up routing rules, managing SSL certificates, and defining health checks can get complex, especially for dynamic or hybrid environments.

Single Point of Failure

If the load balancer itself crashes and lacks redundancy, it can take down the entire application. High availability setups with multiple nodes are essential.

Cost & Infrastructure Overhead

Enterprise-grade load balancers or cloud-based options with autoscaling features can get expensive. Poor planning can lead to underutilization or excessive spend.

4 Best Practices for Load Balancing in Web Application Security

Here are some best practices for using load balancing in web application security:

1. Use Auto-Scaling

Ensure that your load balancer is integrated with auto-scaling capabilities. This allows your application to scale up automatically in response to increased traffic, maintaining high availability during traffic spikes, including those caused by DDoS attacks.

2. Enable Geo-Load Balancing

For global applications, use geo-load balancing to route traffic based on the geographic location of the client. This reduces latency and optimizes the user experience while keeping the application secure by preventing large-scale traffic from overwhelming a single region.

3. Monitor and Adjust

Regularly monitor traffic and performance metrics to ensure your load balancing system is working as expected. Adjust the configuration of your load balancer and scaling policies based on these insights.

4. Integrate with WAF

Integrate your load balancer with a Web Application Firewall (WAF). A WAF can help protect your application from common web attacks (like SQL injection, XSS, etc.) by filtering out malicious traffic before it reaches your servers.

How AppTrana Ensures Load Balancing and High Availability

AppTrana is architected for high performance, scalability, and reliability through its intelligent load balancing framework, which is deeply integrated across its global security and delivery infrastructure.

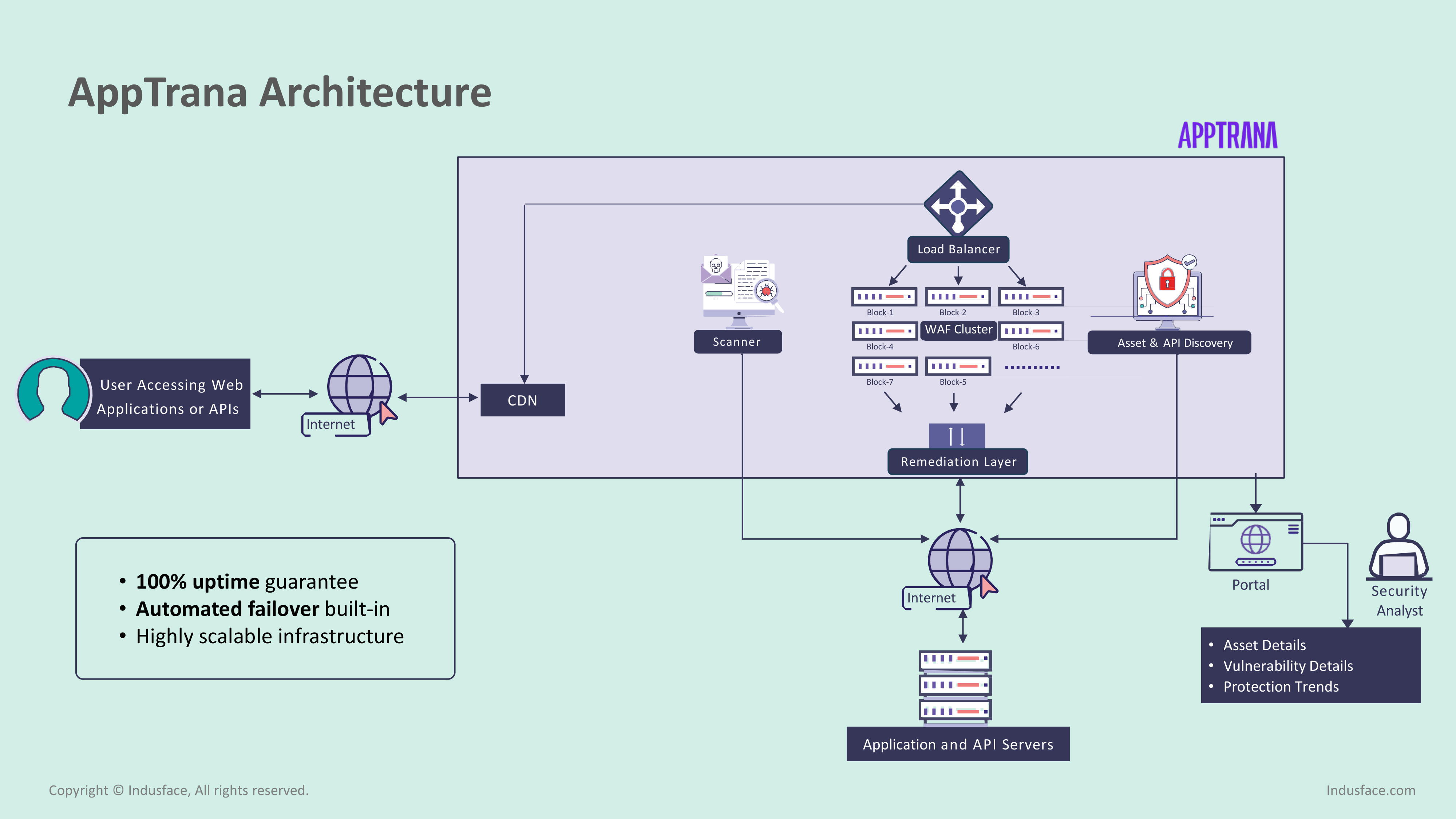

At the core, AppTrana uses a globally distributed, multi-region setup with multiple Availability Zones (AZs) and edge locations. This ensures traffic is intelligently routed and balanced based on real-time load conditions, geographic proximity, and server health. Legitimate requests, whether from browsers, apps, or API gateways, are first received at AppTrana’s multi-layered edge network, where DNS-based and application-level load balancing ensure optimized distribution across WAF clusters (see architecture diagram).

As shown in the diagram, the load balancer sits in front of the WAF cluster, managing traffic across multiple WAF instances. This not only improves response times but also ensures seamless failover in case of regional or instance-level disruptions. Combined with AppTrana’s integrated CDN and edge caching, the load balancer ensures minimal latency for end users while maximizing system resilience.

How AppTrana Prevents Common Load Balancing Challenges

Traditional load balancers often face issues like session loss, complex configurations, single points of failure, and cost inefficiencies. AppTrana’s cloud-native, fully managed approach is purpose-built to eliminate these pain points:

- Built-in session persistence: Sticky sessions and intelligent routing ensure seamless user experiences without reauthentication or session loss.

- Zero configuration complexity: SSL certificates, health checks, and routing logic are fully managed and pre-configured by Indusface—no manual setup required.

- High availability by design: Redundancy across multiple Availability Zones eliminates single points of failure and ensures uninterrupted application access.

- Transparent, usage-based pricing: Customers are only billed for clean traffic, avoiding hidden charges and reducing infrastructure overhead.

- Horizontal scalability: Additional WAF nodes can be dynamically added to handle load spikes or traffic surges.

- Integrated security and delivery: Load balancing works in tandem with DDoS protection, bot mitigation, and edge caching to deliver both performance and protection.

By combining intelligent traffic distribution with deep security enforcement and edge delivery, AppTrana delivers a 100% uptime guarantee and uninterrupted access to web applications and APIs—even during large-scale attack scenarios or infrastructure failures.